Safety Kit for LiDAR perception and data fusion.

Safety Kit

The core product of LFT (LAKE FUSION Technologies GmbH) is our comprehensive Safety Kit. It supports reliable safety for automated driving through rule-based environment perception and the fusion of data from multiple sensors.

Functions

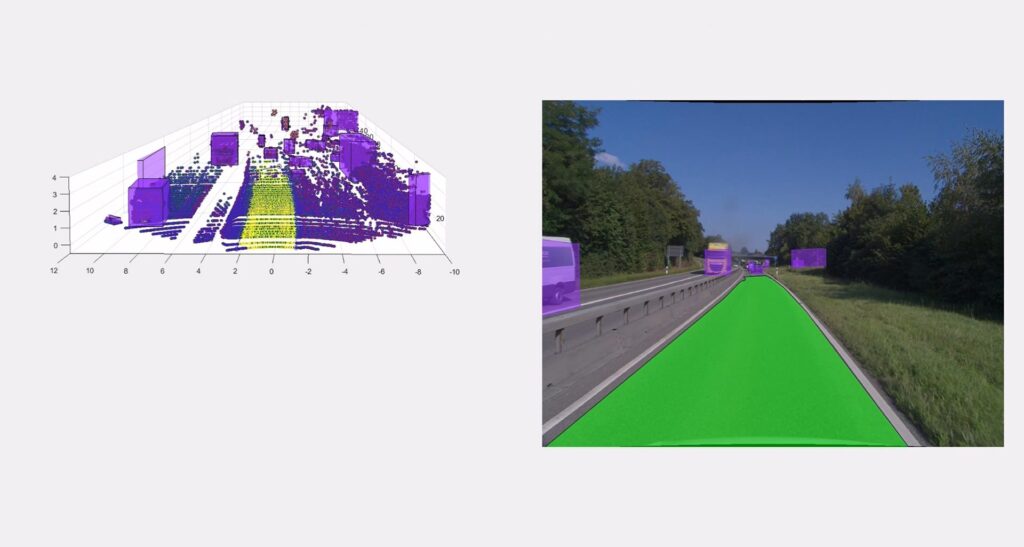

Generation of pure LiDAR-based environmental perception models

Rule-based methods combine features and objects from (LiDAR) data processing

Data quality

Core Benefits

The Safety Kit paves the way for SAE Level 4 approval for autonomous vehicles

The Safety Kit enables safety levels up to ASIL D (SOTIF and ISO 26262)

LFT makes autonomous driving as safe as flying and brings it to a certifiable level

ASPP TigerEye® (Advanced Sensor Perception Processing) - LiDAR Perception

A modular software suite for processing LiDAR data. Based on LFT’s expertise in filtering, segmentation, and classification of LiDAR data in the aviation industry, this product exclusively uses classical deterministic algorithms.

ASPP TigerEye® comprises 8 main modules covering the complete LiDAR data processing chain for environment perception and monitoring:

- TE-1: LiDAR sensor data filtering to eliminate artifacts from LiDAR depth images caused by receiver noise, sunlight, haze, or scattering.

- TE-2: Free Space Detection: Identifies the drivable space in front of the vehicle by segmenting the ground and elevated objects.

- TE-3: Lane Detection: Detects lanes from LiDAR sensor data.

- TE-4: Segmentation, clustering, and tracking of 3D objects.

- TE-5: Offline/Online Calibration: Automated camera image rectification and alignment between LiDAR and camera.

- TE-6: LiDAR Detection Performance Monitoring: Real-time monitoring of sensor range, identification of degradation levels, and delivery of continuous built-in tests (CBITs) for LiDAR sensors.

- TE-7: Detection of relevant small obstacles.

- TE-8: Dynamic Collision Warning: Predicting the trajectory of dynamic objects to avoid collisions.

All modules are available separately from LFT and can be flexibly adapted to various LiDAR sensors and their data formats.
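To illustrate the kind of deterministic check that a collision warning module such as TE-8 performs, the following minimal sketch predicts the closest point of approach between the ego vehicle and a tracked object. It is not LFT's implementation: the constant-velocity motion model, the names `Track`, `closest_approach`, and `collision_warning`, and the threshold values are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical 2D track state: position in metres, velocity in m/s."""
    x: float
    y: float
    vx: float
    vy: float


def closest_approach(ego: Track, obj: Track) -> tuple[float, float]:
    """Return (time, distance) of closest approach, assuming constant velocity."""
    # Position and velocity of the object relative to the ego vehicle.
    rx, ry = obj.x - ego.x, obj.y - ego.y
    vx, vy = obj.vx - ego.vx, obj.vy - ego.vy
    speed_sq = vx * vx + vy * vy
    # No relative motion: the distance never changes, so evaluate at t = 0.
    # Otherwise minimise |r + v*t| and clamp to the future (t >= 0).
    t = 0.0 if speed_sq == 0.0 else max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def collision_warning(ego: Track, obj: Track,
                      safety_radius_m: float = 2.0,
                      horizon_s: float = 5.0) -> bool:
    """Warn if the predicted miss distance falls below the safety radius
    within the prediction horizon (both values are illustrative)."""
    t, distance = closest_approach(ego, obj)
    return t <= horizon_s and distance < safety_radius_m


if __name__ == "__main__":
    ego = Track(x=0.0, y=0.0, vx=10.0, vy=0.0)
    stopped_car = Track(x=30.0, y=0.0, vx=0.0, vy=0.0)
    print(collision_warning(ego, stopped_car))  # True
```

Because the rule is a closed-form expression with fixed thresholds, the same inputs always produce the same decision, which is the property that makes deterministic algorithms easier to verify than learned models.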

ADFS MentisFusion® (Advanced Data Fusion System) - Data Fusion

ADFS MentisFusion® combines deterministic, rule-based algorithms for preprocessed data such as 2D structures, 3D objects, and segmented 3D data points from various data sources. These data sources can include camera or LiDAR data, as well as information from databases (including cloud-based data) and radar information.

Modules of the ADFS MentisFusion® product line:

- MF-1: Lane-Marker Fusion: This software combines lane marking information from various sensors. Fusion techniques analyze and combine lane marking hypotheses when matches exist. If no match exists but there are no conflicts with other hypotheses, further analyses are conducted. The goal is precise and reliable lane detection.

- MF-2: Freespace Detection: The MF-2 software merges road surface information from camera data and LiDAR data. The data is cross-verified to balance the strengths of different sensors and compensate for deficiencies. This enables accurate and reliable detection of the drivable area.

- MF-3: Object Fusion: MF-3 combines object information from semantic camera segmentation, LiDAR, and radar data. The spatial consistency of relevant objects is checked, and information from the various sources is integrated. The aim is to create a comprehensive, accurate representation of detected objects.

- MF-4: Combined Tracking of Objects: Based on the fusion results of MF-3, this software tracks objects over time. Different data sources provide speed estimates, which are combined for accurate tracking results. The interplay of various data sources enables precise localization of both static and moving objects.
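The match/no-match/conflict logic described for MF-1 can be sketched as a small rule-based routine. This is only an illustration under stated assumptions, not the MF-1 algorithm itself: lane markings are reduced to a single lateral offset, and the names `LaneHypothesis` and `fuse_lane_markers`, the two tolerances, and the definition of a "conflict" (a hypothesis from another source that lies close by but outside the match tolerance) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LaneHypothesis:
    source: str      # e.g. "camera" or "lidar"
    offset_m: float  # lateral offset of the marking from the vehicle centre line


def fuse_lane_markers(hyps, match_tol_m=0.3, conflict_tol_m=1.0):
    """Return (fused, pending).

    fused:   averaged offsets confirmed by at least two different sources
    pending: unmatched hypotheses without conflicts, kept for further analysis
    """
    fused, pending = [], []
    used = set()
    for i, a in enumerate(hyps):
        if i in used:
            continue
        used.add(i)
        # Collect hypotheses from other sources that agree with `a`.
        group = [a]
        for j in range(i + 1, len(hyps)):
            b = hyps[j]
            if (j not in used and b.source != a.source
                    and abs(b.offset_m - a.offset_m) <= match_tol_m):
                group.append(b)
                used.add(j)
        if len(group) > 1:
            # Match exists: combine the agreeing hypotheses.
            fused.append(sum(h.offset_m for h in group) / len(group))
            continue
        # No match: keep the hypothesis only if no other source contradicts it.
        conflict = any(
            h.source != a.source
            and match_tol_m < abs(h.offset_m - a.offset_m) < conflict_tol_m
            for k, h in enumerate(hyps) if k != i
        )
        if not conflict:
            pending.append(a)
    return fused, pending


if __name__ == "__main__":
    hypotheses = [
        LaneHypothesis("camera", -1.75),
        LaneHypothesis("lidar", -1.70),   # agrees with the camera marking
        LaneHypothesis("camera", 1.80),
        LaneHypothesis("lidar", 2.40),    # contradicts the camera marking
        LaneHypothesis("camera", 5.25),   # unmatched, but no conflict
    ]
    fused, pending = fuse_lane_markers(hypotheses)
    print(fused, pending)
```

In this sketch, conflicting single-source hypotheses are simply dropped; a production system would more likely pass them to a dedicated plausibility stage.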

Our ADFS MentisFusion® products represent an innovative approach to data fusion, offering a robust solution for creating a holistic, precise, and reliable perception for autonomous driving.

Use Cases

Standards

The advanced safety solutions of LAKE FUSION Technologies GmbH are developed to meet the stringent requirements for SAE Level 4 automated driving approval.

They target safety integrity levels up to ASIL D, in line with ISO 26262 and SOTIF (ISO 21448).